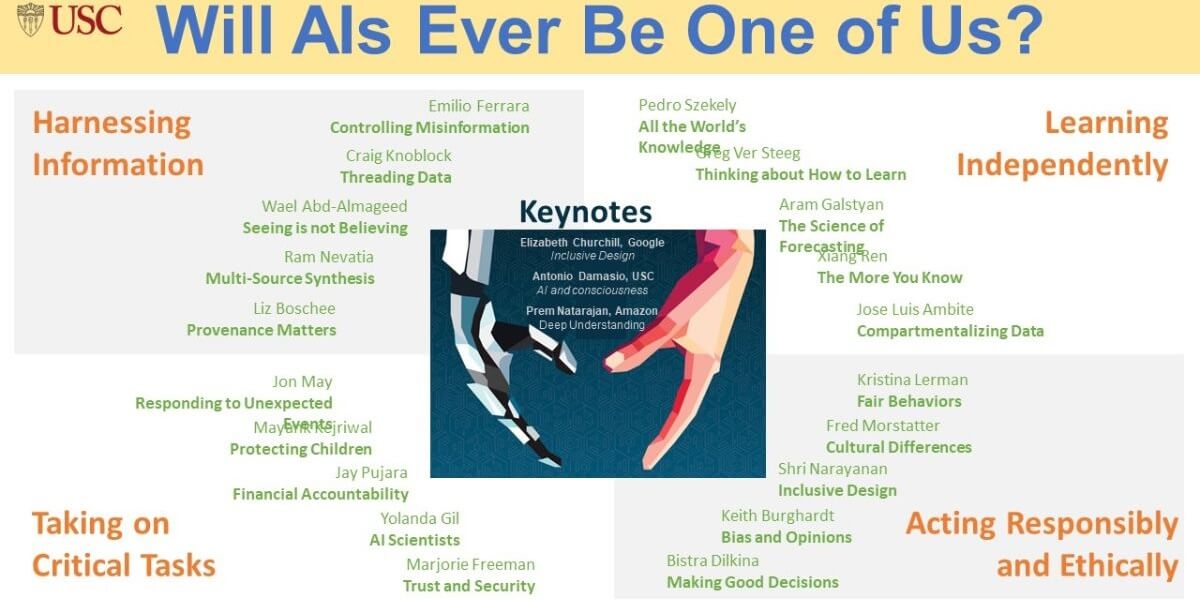

Symposium on the Future of AI: Will AIs Ever Be One of Us?

We all interact with artificial intelligence (AI) systems, but they don’t interact with us the way we interact with one another. Compared to humans, they know very little, do very little, and learn very little.

Imagine a future where AI systems are much more knowledgeable about the world, quickly adapt to our preferences, and can be trusted with critical tasks that go beyond entertainment or shopping. When will we be able to communicate with AI systems the way we do with other people?

On January 12-13, 2021, researchers gathered at a virtual USC AI Futures Symposium to share their insights on these topics.

Yolanda Gil, Chair of the 2021 USC AI Futures Symposium

“We were very excited that over 300 people from all around the world joined us to learn about USC research in human-AI interaction, which is quite a remarkable number of participants for a virtual meeting and attests to the stature of USC in AI research,” said Yolanda Gil, director for major strategic AI and data science initiatives at USC’s Information Sciences Institute (ISI) and USC Viterbi research professor of computer science, who chaired the symposium.

AI at USC

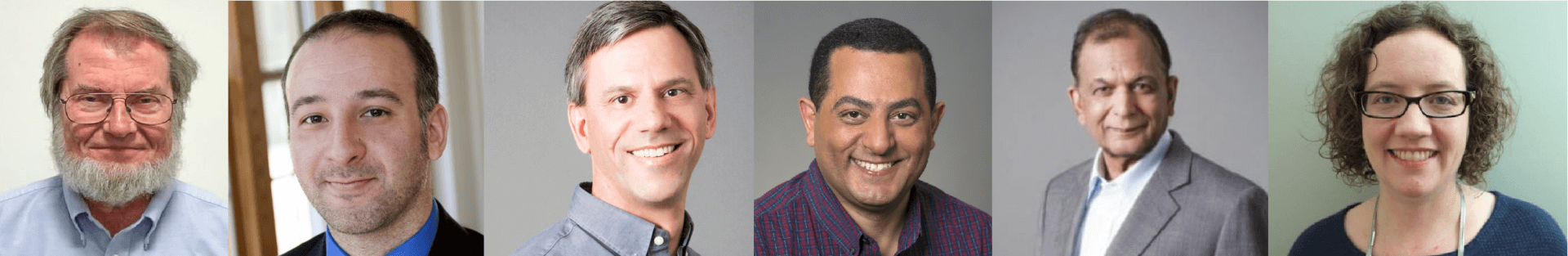

L-R: Yannis Yortsos, Cyrus Shahabi, and Craig Knoblock

Yannis Yortsos, dean of the USC Viterbi School of Engineering, presented in the opening session. “AI is a strategic priority for USC, endorsed by our new President Carol Folt and Provost Chip Zukoski,” he emphasized. “The very rich, interdisciplinary environment of USC is ideally suited for its development across all disciplines and all endeavors.”

This sentiment was echoed by Cyrus Shahabi, chair of the USC Department of Computer Science, who noted that human-AI interaction is a major AI strength at USC and will be the theme of the department’s new home in the Dr. Allen and Charlotte Ginsburg Human-Centered Computation Hall. Craig Knoblock, the Keston Executive Director of USC ISI and research professor of computer science, then highlighted the innovative research directions USC researchers are pursuing in this area, including commonsense reasoning, cultural adaptation of dialogue, misinformation, knowledge-rich learning and responsible decision making.

Knowledge About the World

L-R: Ralph Weischedel, Emilio Ferrara, Craig Knoblock, Wael AbdAlmageed, Ram Nevatia and Liz Boschee

A session in the symposium was devoted to new research on AI systems that incorporate extensive knowledge about the world. The discussion was led by Ralph Weischedel, research team leader at ISI, and the speakers were Emilio Ferrara, associate professor of communication and computer science and research team leader at ISI; Craig Knoblock; Wael AbdAlmageed, research associate professor; Ram Nevatia, professor of computer science; and Liz Boschee, director at ISI Boston.

Though humans easily acquire common sense from an early age, it has been notoriously difficult for AI to grasp. USC is a pioneer in AI research that extracts this kind of knowledge from documents, images and specialized sources such as Wikipedia. While there have been significant advances in extracting individual statements, integrating those statements into a usable knowledge source and using it to improve the performance of AI systems remains a major challenge.

The session also presented new insights on detecting and handling misinformation. On social media platforms, it’s essential to distinguish bots from humans in order to alert people to misinformation. Multimedia forensics can help verify the integrity and authenticity of images and videos we see online, including deepfakes that impersonate real people. Sometimes AI systems simply misunderstand information, and establishing where a given piece of data came from can help determine whether it can be trusted.

Acting Responsibly and Ethically

L-R: Sven Koenig, Kristina Lerman, Fred Morstatter, Shri Narayanan, Keith Burghardt and Bistra Dilkina

Only a decade after George Bekey, USC computer science emeritus professor, published a pioneering book on “Robot Ethics” in 2011, ethical concerns have taken center stage in AI research.

This discussion was led by Sven Koenig, professor of computer science, and the USC experts who spoke on this topic were Kristina Lerman, research professor of computer science and research team leader at ISI; Fred Morstatter, research assistant professor of computer science and research lead at ISI; Shri Narayanan, professor of electrical and computer engineering and research director at ISI; Keith Burghardt, computer scientist at ISI; and Bistra Dilkina, associate professor of computer science.

New techniques were presented for identifying and measuring biases in data and understanding their sources in order to mitigate them. Ranking algorithms, which are used for product recommendations and search, have long been known to exhibit biases due to popularity or position, and new findings show that these biases can be addressed by reducing instability under different conditions. AI systems can also help reduce bias in media by measuring factors such as on-screen time and speaking time for different demographic groups, revealing opportunities to improve equality and diversity.

Inclusiveness and equity are also central topics in AI ethics. Inclusive design of AI systems leads to more equitable experiences that account for individual differences, particularly among children at different developmental stages. Cultural differences can be identified by observing communication within large groups, with promising results from analyses of open, online collaborative projects in different countries.

Acting responsibly often involves considering resource limitations while maximizing impact. Several novel AI techniques were shown to be effective for large-scale optimization and machine learning, particularly when learning is informed by downstream decision processes.

Learning Independently

L-R: Yan Liu, Pedro Szekely, Greg Ver Steeg, Aram Galstyan, Xiang Ren, Jose-Luis Ambite

Although AI is advancing at an unprecedented rate, AI systems have a long way to go before they match the human ability to learn continuously and independently. This session was led by Yan Liu, professor of computer science, and the speakers who addressed this topic included Pedro Szekely, research associate professor in computer science and research director at ISI; Greg Ver Steeg, research associate professor in computer science and research lead at ISI; Aram Galstyan, director of the Artificial Intelligence Division at ISI and research associate professor in computer science; Xiang Ren, assistant professor of computer science and research lead at ISI; and Jose-Luis Ambite, research associate professor in computer science and research team leader at ISI.

The world is constantly changing, which means our knowledge must keep pace. New research on self-supervised machine learning is opening the door to using data that has not been annotated with labels, which most machine learning approaches require. Research on continuous learning shows that AI systems can learn to automatically refresh their beliefs about the world. Another strategy that has proven very effective is federated learning, a distributed approach used when data has been collected but is not publicly accessible, as is the case with health and other sensitive data.

As powerful as AI systems can be, they still need our guidance. New approaches that combine machine learning with human predictions have improved the forecasting of trends and events. Incorporating human explanations and rationales has also significantly improved machine learning.

AI for Critical Tasks

L-R: Pedro Szekely, Jon May, Mayank Kejriwal, Jay Pujara, Yolanda Gil and Marjorie Freedman

USC has a long tradition of tackling problems of societal importance through human-AI interaction. A session devoted to this work was chaired by Pedro Szekely and included speakers Jon May, research assistant professor in computer science and research lead at ISI; Mayank Kejriwal, research assistant professor of industrial and systems engineering and research lead at ISI; Jay Pujara, research assistant professor in computer science and research lead at ISI; Yolanda Gil; and Marjorie Freedman, research team leader at ISI.

In times of natural disaster, victims and local first responders communicate in their own languages, which creates barriers for aid-providing NGOs and other international organizations. AI technologies that can quickly create automated translators for regional languages are radically changing the ability to support emergency response and humanitarian aid.

Protecting runaway children from forced prostitution is difficult because commercial sex trafficking operates worldwide through online advertising and transactions that are hard to track. Fortunately, significant progress is being made with AI technologies that automatically identify and crawl suspicious sites to detect patterns and trends, generating leads and evidence for law enforcement.

Under-resourced entrepreneurs cannot easily develop solid business plans because it is difficult to obtain comprehensive knowledge about the prospective competitors, customers, and existing technologies most relevant to their idea. Statistically, over 50% of new businesses fail within the first five years. Customized market intelligence can be delivered by AI systems that automatically integrate years of data on hundreds of thousands of companies, collected from webpages, patent filings, spreadsheets, social media, and regulatory filings with government agencies.

AI could also accelerate scientific research by automatically analyzing the wealth of data now available in the sciences. AI algorithms for systematic search lead to better solutions by quickly exposing inconsistencies in findings. AI techniques also reduce the time needed to create new models of complex systems. AI systems can assist, and ultimately collaborate with, scientists to accelerate discoveries.

Lastly, AI approaches can significantly enhance privacy and security. For example, social engineering attackers, such as those who carry out spear-phishing, can be deterred when they are engaged by AI systems instead of the intended human targets, which raises the cost of an attack. Many other AI approaches are being applied to secure financial transactions, detect and fix code vulnerabilities, and predict and detect attacks.

Other Perspectives on Human-AI Interaction

L-R: Elizabeth Churchill, Antonio Damasio, Prem Natarajan

Two keynote speakers from industry discussed significant challenges in human-AI interaction. Elizabeth Churchill, director of user experience at Google and an expert in the design of interactive technologies, made the provocative statement that “systems create the conditions under which humans make errors,” but the nature of those errors cannot be anticipated because every person is different. Prem Natarajan, vice president of Amazon’s Alexa, talked about adapting AI systems by teaching them new concepts when the need arises during interactions.

A third keynote speaker was Antonio Damasio, USC professor of neuroscience, psychology and philosophy, who discussed consciousness in terms of how behavior is governed by emotional states (e.g., fear) grounded in feelings that are directly linked to biological states (e.g., pain, hunger). He posed the intriguing question of whether consciousness will be relevant to the design of artificial intelligence systems grounded in physical sensors.

AI is Now

Maja Matarić

The closing session of the symposium featured Maja Matarić, USC interim vice president of research and professor of computer science. She urged the AI community to apply AI to major societal challenges, saying that major institutions must embrace AI throughout their operations, all the way down to how we distribute vaccines, how we communicate about mask-wearing and how we approach equity.

As Matarić succinctly stated, “AI is not for the future — AI is now.”

More information about the event, including videos of the sessions, is available online.

Published on March 1st, 2021

Last updated on April 8th, 2021