ISI researchers train artificial intelligence models to consider common sense when generating responses

Artificial intelligence (AI) is becoming part of the backbone of everyday life. However, a gap remains between the way humans think and the way AI models process language. Most language models are trained to take the information they are given and produce a response based on their stored knowledge. Humans, when having conversations, also factor in common sense, mutual beliefs, and implicit knowledge gathered from real-life experiences or incidental activities.

Those elements change the interpretation of what we are saying, but current AI models do not factor them in, so their responses are often less accurate and less human-like. Speaking with a virtual assistant can be frustrating when it does not understand what you intend to ask, or when it provides a mediocre or incorrect response to your request. Imagine never having to worry about that communication barrier again. Bridging the gap between the way humans communicate and the way AI models generate responses would be a major development for the future of technology and for AI’s role in society. Siri, Amazon Alexa, your car, and any other machine that converses with you would be able to communicate more effectively and provide more accurate responses.

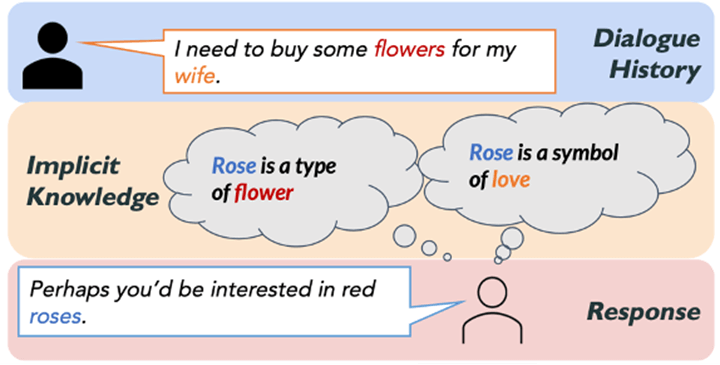

“One example we used in the paper is telling a conversation partner that you want to buy flowers for your wife. Humans naturally understand that buying flowers is an act of love, and that roses are a type of flower associated with love. Our research trained dialogue agents to be more human-like by generating these types of common sense thoughts and speaking more meaningfully, by suggesting buying roses,” explained Jay Pujara, research lead at ISI and co-author on this study.

This is an example of humans making implicit inferences based on background knowledge when conversing. Without the implicit knowledge of what a rose is and what roses are symbolic of in everyday life, the response would not have been the same.

The work, “Think Before You Speak: Explicitly Generating Implicit Commonsense Knowledge for Response Generation,” led by Pei Zhou, a Ph.D. candidate at ISI, was accepted to ACL 2022 (the 60th Annual Meeting of the Association for Computational Linguistics). It used self-talk models, rather than traditional end-to-end models that do not account for implicit knowledge, to test whether applying implicit knowledge as a factor improves the accuracy of AI-generated responses. The inspiration for this study comes from Zhou’s previous work on improving human-machine communication. “The important part Pei picked up as one of the main angles for his research was to study the role of common sense in human-machine communication. Current models lack common sense knowledge; they are not capable of making inferences the way humans do,” said Dr. Xiang Ren, a research team lead at ISI, assistant professor, and co-author on this paper.

Ren also stated, “We wanted to see if it would benefit the models as well as humans if they had the ability to mimic the same thought process as humans do.” As it turns out, it does.

The study showed that when artificial intelligence models are given the tools to think in a way similar to humans, they generate more common-sense knowledge of their own. Ren explained, “by explicitly telling the model what common knowledge is useful to the current conversation, the model produces more engaging and natural responses.” Some may assume that the models already have their own common sense. However, these results show that explicitly giving the models common-sense knowledge leads to more human-like and sensible responses.

Pei Zhou also discussed the results of the study, noting that beyond response quality, the approach improved the abilities of the self-talk models themselves. Once the models were given knowledge generated from a common-sense database, they began creating their own thought process: with only implicit information given at the source, they were able to generate new common-sense knowledge.
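For readers who want a concrete picture, the two-step idea of "thinking" before "speaking" can be sketched in a few lines of Python. This is only a toy illustration and not the researchers' method: hand-written rules stand in for the trained neural models, and the knowledge list, function names, and reply templates are all hypothetical.

```python
import re

# Hypothetical stand-in for a common-sense knowledge source.
# Each fact is (subject, relation, object); none of this comes from the paper.
TOY_KNOWLEDGE = [
    ("buy flowers for wife", "is motivated by", "love"),
    ("rose", "is a symbol of", "love"),
    ("umbrella", "is used for", "rain"),
]


def generate_knowledge(utterance):
    """Step 1 ("think"): turn implicit background knowledge into explicit facts."""
    words = set(re.findall(r"[a-z]+", utterance.lower()))
    # Facts whose subject is fully mentioned in the utterance.
    direct = [f for f in TOY_KNOWLEDGE if set(f[0].split()) <= words]
    # Facts about other things that share an object with a direct fact,
    # e.g. flowers for a wife -> love <- rose.
    shared = {f[2] for f in direct}
    chained = [f for f in TOY_KNOWLEDGE if f not in direct and f[2] in shared]
    return direct + chained


def generate_response(utterance, knowledge):
    """Step 2 ("speak"): base the reply on the explicit facts, if any.

    A real model would condition on the utterance too; this toy ignores it.
    """
    for subject, relation, obj in knowledge:
        if relation == "is a symbol of":
            return f"How about {subject}s? A {subject} {relation} {obj}."
    # With no knowledge available, fall back to a generic reply.
    return "That sounds nice."


if __name__ == "__main__":
    said = "I want to buy flowers for my wife."
    facts = generate_knowledge(said)
    print(generate_response(said, facts))  # How about roses? A rose is a symbol of love.
    print(generate_response(said, []))     # That sounds nice.
```

In the sketch, skipping the knowledge step yields only a generic reply, while making the link between flowers, love, and roses explicit yields the more specific suggestion from the flower example.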

The more closely artificial intelligence is trained to reflect real human characteristics and thought processes, the more we can use it as a tool for advancing technology, and the more human-like our interactions will be with programs in which we converse with AI instead of a real person.

Authors: Pei Zhou, Karthik Gopalakrishnan, Behnam Hedayatnia, Seokhwan Kim, Jay Pujara, Xiang Ren, Yang Liu, Dilek Hakkani-Tur. This work was undertaken with support from DARPA’s Machine Common Sense Program, Amazon, and Google.

Published on June 7th, 2022

Last updated on June 7th, 2022