Latest News

ISI Researchers Pioneer Developments In Protein Data Bank

ISI researchers are part of a core team developing the PDB-Dev repository, initiated by the Protein Data Bank, a foundational repository for macromolecular structures.

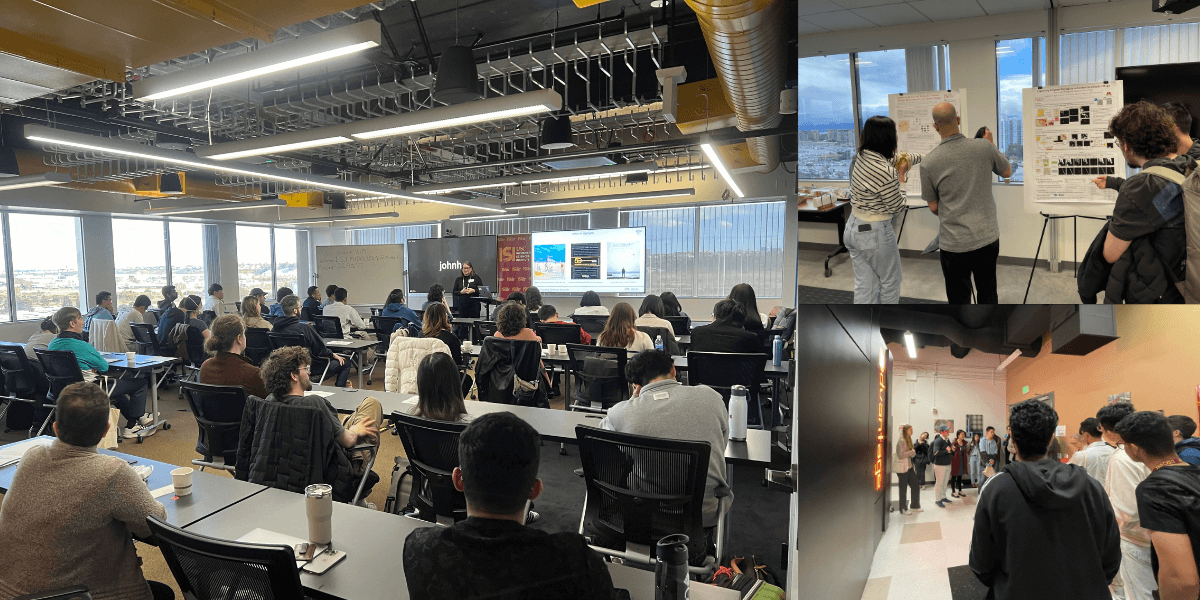

Inside ISI’s Ph.D. Visit Day 2024

Prospective graduate students traveled to ISI’s Marina del Rey headquarters to experience the institute’s academic environment.

To “Howdy,” or Not to “Howdy,” That Is the Question

USC researchers explore the impact of prompt design on large language model performance.