Using Computer-Generated Images to Create Unbiased Facial Recognition

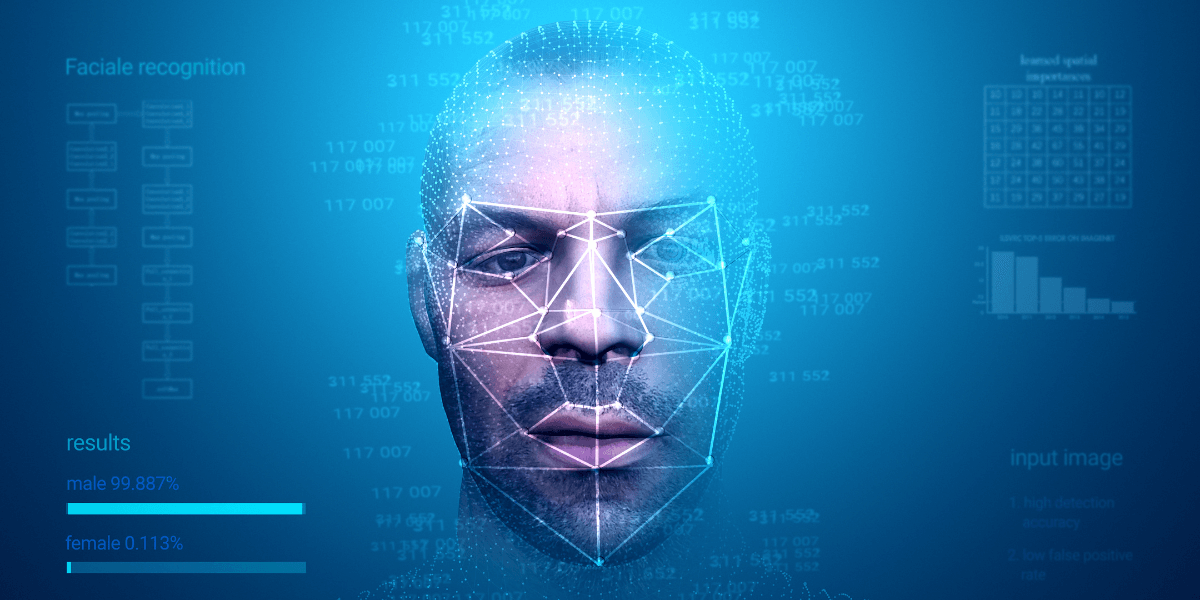

Facial recognition used to border on science fiction, a dramatic tool mostly consigned to spy movies and police procedural television shows. Discovering a person’s identity based on just their photo and a search engine seemed more like fantasy than fact.

However, technology grows more advanced every year, and facial recognition software now pervades everyday life. Your face can unlock your phone and even pay your restaurant bill, yet the software that makes this possible is often riddled with weaknesses and can make critical mistakes. This is especially true if you are a member of one or more minority groups, whose faces the software is notoriously bad at identifying. Not only is this unfair and aggravating, but a bias based on one of the government’s defined protected characteristics (such as race, gender, or disability status) can violate federal law.

While the government has not yet put forth any regulations on facial recognition software, researchers are scrambling to find the best way to remove bias from these programs. In his work at USC Information Sciences Institute (ISI), graduate research assistant Jiazhi Li has found a novel approach to the creation of equitable, unbiased programs.

In his 2022 paper, titled “CAT: Controllable Attribute Translation for Fair Facial Attribute Classification,” Li breaks from traditional methods of mitigating bias in facial recognition software. Typically, researchers test a program using a set of existing photographs of real people’s faces. They then observe correlations within the results that may indicate bias, such as people from a specific identity group being disproportionately identified as possessing a facial attribute.
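The kind of correlation check described above can be sketched in a few lines of Python. This is only an illustrative sketch, not the evaluation used in Li’s paper: the function names, the group labels "A" and "B", and the toy predictions are all hypothetical. It compares how often a classifier assigns a facial attribute to each identity group, a quantity sometimes called the demographic parity gap.

```python
from collections import defaultdict


def positive_rate_by_group(groups, predictions):
    """Return the fraction of positive attribute predictions for each group.

    groups      -- one group label per test photo (hypothetical labels)
    predictions -- 1 if the classifier says the attribute is present, else 0
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, pred in zip(groups, predictions):
        totals[group] += 1
        positives[group] += int(pred)
    return {group: positives[group] / totals[group] for group in totals}


def max_rate_gap(rates):
    """Largest difference in positive-prediction rate between any two groups."""
    return max(rates.values()) - min(rates.values())


# Toy data (made up for illustration): eight test photos from two groups.
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
predictions = [1, 1, 1, 0, 1, 0, 0, 0]

rates = positive_rate_by_group(groups, predictions)
gap = max_rate_gap(rates)
print(rates, gap)
```

A large gap does not prove bias on its own, but it flags a correlation between group membership and the predicted attribute that deserves a closer look.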

While this tactic is moderately successful, it does not cover all possible types of bias. Sometimes the problem lies within the sample set of photographs used to train the software. People in minority groups, including both protected classes and those with rarer attributes like red hair, are generally underrepresented in the sample dataset. Unfortunately, academic departments are limited in their ability to gather sample photographs for many reasons, including privacy concerns.

Li, with the help of Wael AbdAlmageed, USC ISI Research Director & Associate Professor of Electrical and Computer Engineering, developed a method to fill in the gaps in the dataset: artificially generating new images. If the dataset is lacking in subjects with blond hair, Li’s program can simply create more. “We were able to create synthetic training datasets that, combined with real data, contain a balanced number of examples of facial images with different attributes (e.g. age, sex, and skin color),” explains AbdAlmageed.

By adding synthetic, computer-generated photographs that contain less common features, researchers can train the program with far less bias, because the sample photos then contain roughly equal numbers of examples of each attribute.
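As a rough illustration of the balancing idea, the sketch below counts how many synthetic images each attribute value would need so that every value appears as often as the most common one. It is a simplification under stated assumptions, not the method from the paper: the function name, the hair-color labels, and the counts are hypothetical, and the image generation step itself is left out entirely.

```python
from collections import Counter


def synthetic_images_needed(labels):
    """Return how many synthetic images to add per attribute value.

    Each value is topped up to the size of the largest group, so the
    combined real-plus-synthetic dataset is evenly balanced.
    """
    counts = Counter(labels)
    target = max(counts.values())
    return {label: target - count for label, count in counts.items()}


# Toy dataset (made up for illustration): hair color of 14 real photos.
real_labels = ["brown"] * 6 + ["black"] * 5 + ["blond"] * 2 + ["red"] * 1

needed = synthetic_images_needed(real_labels)
print(needed)

# After adding the synthetic images, every hair color has the same count.
balanced = Counter(real_labels)
balanced.update(needed)
print(balanced)
```

Topping up to the largest group is only one possible target. A real pipeline might balance several attributes jointly (for example age, sex, and skin color together) rather than one at a time.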

During his research, Li was pleased to discover that the programs were able to learn with synthetic images just as well as they learned with real samples.

Because this method relies on an automatic system to generate images instead of researchers creating them individually, Li believes it can scale to many applications and many types of datasets. “Entities (e.g. research groups and companies) that develop face recognition algorithms could use this technology to synthetically balance their training data such that the final algorithm is fairer to minorities,” hopes AbdAlmageed. The method should also be applicable to all types of facial attributes, not just the ones discussed in Li’s paper. The next step for this work would be to “extend the research such that AI algorithms are not sensitive to the small number of examples of minorities, without having to augment the dataset,” concludes AbdAlmageed.

Li’s research was funded in part by the Office of the Director of National Intelligence’s (ODNI) Intelligence Advanced Research Projects Activity (IARPA). Li presented his research at the 2022 European Conference on Computer Vision (ECCV) Workshop on Vision With Biased or Scarce Data (VBSD).

Published on January 3rd, 2023

Last updated on May 16th, 2024